On 22 July 2016 at 15:15, Andrew Smith <me andrewmichaelsmith com> wrote:

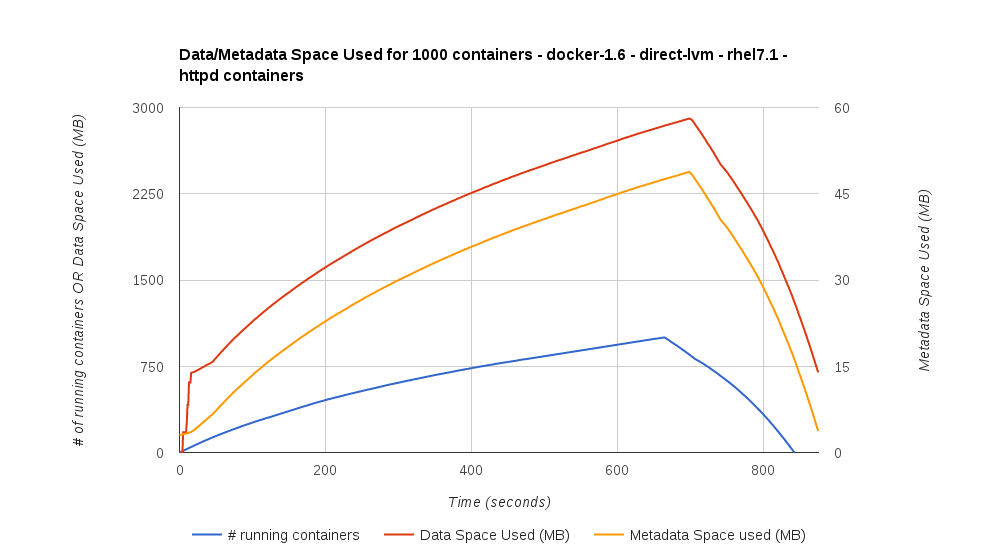

Hi Jeremy

Thanks for the response! Do you need a maxed out lsblk or just our general setup? Here's a happy (not 100% metadata) box:

    [15:13:09] root kubecltest-0:/home/company# lsblk
    NAME                          MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    xvda                          202:0    0  512M  0 disk
    └─xvda1                       202:1    0  511M  0 part /boot
    xvdb                          202:16   0   20G  0 disk /
    xvdc                          202:32   0   20G  0 disk
    ├─docker-docker--pool_tmeta   253:0    0   24M  0 lvm
    │ └─docker-docker--pool       253:2    0 19.6G  0 lvm
    └─docker-docker--pool_tdata   253:1    0 19.6G  0 lvm
      └─docker-docker--pool       253:2    0 19.6G  0 lvm
    xvdd                          202:48   0    1G  0 disk [SWAP]

    [15:13:22] root kubecltest-0:/home/company# docker info
    Containers: 1
    Images: 322
    Storage Driver: devicemapper
     Pool Name: docker-docker--pool
     Pool Blocksize: 524.3 kB
     Backing Filesystem: extfs
     Data file:
     Metadata file:
     Data Space Used: 2.856 GB
     Data Space Total: 21.04 GB
     Data Space Available: 18.18 GB
     Metadata Space Used: 4.42 MB
     Metadata Space Total: 25.17 MB
     Metadata Space Available: 20.75 MB
     Udev Sync Supported: true
     Deferred Removal Enabled: false
     Library Version: 1.02.107-RHEL7 (2015-12-01)
    Execution Driver: native-0.2
    Logging Driver: json-file
    Kernel Version: 3.10.0-327.10.1.el7.x86_64
    Operating System: CentOS Linux 7 (Core)
    CPUs: 1
    Total Memory: 989.3 MiB
    Name: kubecltest-0
    ID: UTBH:QETO:VI2M:E2FI:I62U:CICM:VR74:SW3U:LSUZ:LEKY:BRTW:TQNI
    WARNING: bridge-nf-call-ip6tables is disabled

Any more info just let me know.

Thanks

Andy

On 22 July 2016 at 13:33, Jeremy Eder <jeder redhat com> wrote:

+Vivek

I don't know the exact bug here, but one possibility is that since you've disabled the auto-extend, it's leaving the metadata size at its default?
There is indeed a relationship between the two and I did a lot of testing around it to make sure we were not going to hit what you've hit :-/ At that time, however, we did not have the auto-extend feature.

So I think you may have found a situation where we need METADATA_SIZE=N but you said you set that at 2%?

Can you paste the output of "docker info" and "lsblk" please?

From April 2015 when I last looked at this, the tests run were to start 1000 httpd containers and measure the thinpool used size. Here:

So, for 1000 rhel7+httpd containers, on-disk it was using about 3GB (left Y-axis). And the metadata consumed was about 50MB (right Y-axis).

You can see we concluded to set metadata @ 0.1% of the data partition size, which in the case:

I am wondering what your docker info will say.

--

On Fri, Jul 22, 2016 at 8:08 AM, Andrew Smith <me andrewmichaelsmith com> wrote:

Hi

We've been using docker-storage-setup (https://github.com/projectatomic/docker-storage-setup) to set up our CentOS LVM volumes.

Because our block devices are fairly small and our containers don't write data inside them (and we hit issues with resizes being very slow) we have a static setup:

    AUTO_EXTEND_POOL=False
    DATA_SIZE=98%VG

The intention is to just create the lv as big as we can up front, because we don't need that space for anything else. We leave 2% for metadata.

However, we're running into a problem where our metadata volume is hitting 100% before our data volume gets close (sometimes, 60%). We're deploying kubernetes on these boxes, which garbage collects based on data usage, not metadata usage, so we are often hitting the 100% and taking out boxes.

What I'm struggling to understand is how much metadata a docker image or docker container uses. If I can find that out I can figure out a good data:metadata ratio. Is there a way for me to discover this?

Or, as I suspect, am I missing something here?

Thanks

--

Andy Smith

http://andrewmichaelsmith.com | @bingleybeep

--

Jeremy Eder

--

Andy Smith

http://andrewmichaelsmith.com | @bingleybeep
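
The data:metadata ratio asked about in the thread can be read off the posted `docker info` figures with a little arithmetic. The following is a minimal sketch in Python, not part of the original emails: the helper names `parse_sizes` and `pool_usage` are hypothetical, and the input is just the four relevant lines copied from the output above. It assumes the sizes are in decimal units, which matches the posted values (24M in `lsblk` shows up as 25.17 MB).

```python
import re

# Excerpt of the `docker info` output posted in the thread.
DOCKER_INFO = """\
Data Space Used: 2.856 GB
Data Space Total: 21.04 GB
Metadata Space Used: 4.42 MB
Metadata Space Total: 25.17 MB
"""

# Assumption: sizes are reported in decimal (power-of-1000) units.
UNITS = {"kB": 1e3, "MB": 1e6, "GB": 1e9, "TB": 1e12}


def parse_sizes(text):
    """Return {field name: size in bytes} for lines like 'Name: 1.5 GB'."""
    sizes = {}
    for line in text.splitlines():
        m = re.match(r"\s*(.+?):\s*([\d.]+)\s*(kB|MB|GB|TB)\s*$", line)
        if m:
            sizes[m.group(1)] = float(m.group(2)) * UNITS[m.group(3)]
    return sizes


def pool_usage(sizes):
    """Return (data used %, metadata used %, metadata size as % of data size)."""
    data_pct = 100 * sizes["Data Space Used"] / sizes["Data Space Total"]
    meta_pct = 100 * sizes["Metadata Space Used"] / sizes["Metadata Space Total"]
    meta_share = 100 * sizes["Metadata Space Total"] / sizes["Data Space Total"]
    return data_pct, meta_pct, meta_share


data_pct, meta_pct, meta_share = pool_usage(parse_sizes(DOCKER_INFO))
print(f"data used:                {data_pct:.1f}%")
print(f"metadata used:            {meta_pct:.1f}%")
print(f"metadata size / data size: {meta_share:.2f}%")
```

On the posted figures this works out to roughly 13.6% data used, 17.6% metadata used, and a metadata volume of about 0.12% of the data volume. That share is much closer to the 0.1% figure Jeremy mentions than to the 2% Andy intended to leave, though the thread itself does not confirm why.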